This is a true story of a day in the life of several software developers (one who proudly and regularly declares #IHATEHardware) and a hardware/networking professional, and one of our customers who will of course remain anonymous for obvious reasons. That said, I share this story of lessons learned and reinforced in hopes that this happens to no one else and that it encourages you to help others protect their data assets so they are not taken to the edge of losing their business.

My days normally start out around 8:00am because most mornings I like to sleep until something naturally wakes me up. Most days it is construction noise in the neighborhood, my wife’s alarm, or the dog, but on July 16th it was a phone call from Frank Perez, who is one of my teammates at White Light Computing. It was a very early 6:15am. I was waking up out of a dream where I was in a stadium full of people and there was an earthquake happening (probably something in the 5.0 range, which was kind of cool). In my dream my phone was ringing too. Surprisingly, I answered it and it was Frank, who started talking about the details of an investigation he was conducting based on a slew of error reports overnight from one of our customers. Normally the error reports are related to the network failing, which is reported to the customer’s IT Director. But these error reports started early and were “not a table” errors. Frank connected to the server where the data was located and tried to open the tables named in the error reports. They failed to open. Upon further inspection he found them encrypted, and in the folder he also found two files:

1) How_to_decrypt.GIF

2) How_to_decrypt.HTML

(Note: the instructions in the two files are not the same. The HTML made me quite nervous as it could have active content. I do not advise opening up this file in the wild just to be extra safe.)

Frank suspected that someone had unknowingly installed Cryptolocker or one of its variants. This was the second time in a few weeks Frank had seen this, the first being at a different customer (who literally had no backups). Based on the time stamps, Frank was guessing it started between 8:00 and 8:15pm the night before. So it had been running for about 10 hours. In my experience, and from the research I had done, Cryptolocker isolated itself to the computer it was installed on. This was the first time I had heard of it jumping from a workstation to the server. The day was going downhill quickly.

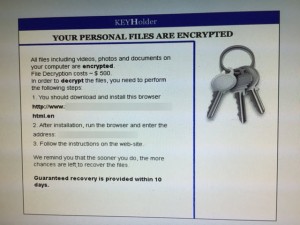

Here is an image of the How_to_decrypt.GIF:

Something you never want to see on your computer!

(I’ve blurred out a couple of things in case it will identify our customer)

This was not how I was expecting to start my Thursday. I formulated a plan to contact key people and then head into their office with Frank. I talked to the owner of the company, who I learned was out of town and a couple of time zones away. I talked with the IT Director, who was away on vacation, to get the lowdown on the backups and where they were. I knew that without the data people were going to be doing a lot of manual work, and most of the workers would not even be able to do their jobs. Awesome news: a backup of the server is taken at 5:00pm each day. It sounded like we might only be missing a few hours of data, and the workers who work between 5:00 and 8:00pm use the apps with SQL Server and not the DBFs, so things were sounding like they might not be as bad as I originally feared.

For those who have not been introduced, Cryptolocker (aka Cryptowall, CryptoOrbit, and Cryptolocker 3.0) is ransomware and it is not fun at all. I have seen this too many times in the past couple of years at customer sites. Although it behaves like one, this “software” is not a virus; it is a rootkit that establishes itself on the computer. It arrives via socially engineered email attachments that can fool even the savviest of computer users, people who know better. The attachment leads to a link on the Internet, and the software installs from there. It then calls home to get a key and begins to encrypt files with predefined extensions. The list started out as MS Office extensions, but it has been expanded (oddly, INI and XML are not on the list). Unfortunately, Visual FoxPro data files are on the list. The process encrypts the files one folder at a time. The first variant of this software stuck to the local computer, so if someone opened the attachment and followed the link, only one computer was affected. Still, for some of our customers, this can be bad enough depending on the computer that gets hit. But this latest variant now hits mapped drives, so files on a server or another computer in a peer-to-peer network can join in on the fun. And the performance is very impressive, as it had all the files in the data folder on the server encrypted in less than 20 minutes.

I learned Thursday from someone who recently tested six of the most common anti-virus and anti-malware programs that not a single one found it on an infected machine. The day gets worse.

There are two ways to get your files back: restore from backup or pay the ransom and decrypt the files using the key returned from those holding them hostage. If you have good backups, it might not be too bad depending on the timing of the backups. I was thinking it would not be a problem as there are daily backups and we had the most recent a few hours before the attack.

So back to the 7:00am hour: I had contacted a couple of people on my team who help support this customer and the key players at the customer site, and headed into the office.

Once at the office we met with the newest member of the team, who is the new hardware/networking tech for our customer. Frank explained his findings and our hypothesis. The tech had recent experience with the newest variant of Cryptolocker, confirmed Frank’s conclusion, and gave us the lowdown on what had happened, how this ransomware works, and what we needed to do.

Developing the plan of attack:

- Disconnect each computer from the network in case of propagation. Kill the wireless so no laptops and other devices could connect to the network.

- Search each computer for ransom files, starting in the room that was working around 8:00 last night, to find the computer that is doing the encryption (“patient zero”).

- Remove the computer from the room.

- Verify problem really is what we hypothesized.

- Determine the damage on the workstation and the server.

- Step back and develop the recovery plan

The approach, the collaboration, the planning, and the implementation of the plan reminded me of how firemen approach a fire. If you follow a fire truck to a fire you are likely to witness something that at first seems disturbing. The truck stops and the firemen get out. They are not running around. They are methodically executing a plan, which to the common person might seem to be moving at a slower pace than is needed to get the fire out. As the fire rages in the building, the firemen get their gear, strap on air tanks, put ladders up and get on the roof, pull the hoses off the truck, attach to the fire hydrant, and put on their air masks. Some start cutting holes in the roof and others start throwing water on the fire. Often the fire is out in short order. It is because of the planning, the training, and the implementation of the plan that things work so well. This is how we worked to find the troubled computer and determine how to get the customer back to work.

Finding the machine that installed Cryptolocker turned out to be simple, as all we had to do was search for the file names above on the C: drive, and possibly other drives on the computer. In this office there are close to 50 computers, so the task took a little time with three of us unplugging and searching. We found the troubled computer pretty quickly. Murphy’s Law would have dictated that it show up on the 50th computer, but instead it was one of the first.
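For anyone who has to repeat this search across a lot of machines, here is a minimal Python sketch of the idea. It is not what we ran that day (we used the ordinary Windows file search); the function name `find_ransom_notes` is made up for illustration, and the file names are the two from this incident. Other variants may well leave different files behind, so treat the list as an assumption.

```python
import os

# File names from the ransom notes in this incident (compared in lowercase).
# Assumption: other variants may use different names, so adjust as needed.
RANSOM_NOTE_NAMES = {"how_to_decrypt.gif", "how_to_decrypt.html"}


def find_ransom_notes(root):
    """Return sorted paths of files under root whose name matches a ransom note."""
    hits = []
    # os.walk ignores folders it cannot read by default, so this never raises
    # on a locked-down directory; it just skips it.
    for folder, _subfolders, files in os.walk(root):
        for name in files:
            if name.lower() in RANSOM_NOTE_NAMES:
                hits.append(os.path.join(folder, name))
    return sorted(hits)
```

Calling `find_ransom_notes("C:\\")` on each workstation returns an empty list on a clean machine. Any hit is a reason to keep that machine off the network.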

The fact is: we considered paying the ransom to get the server back to normal. The people cost involved in rebuilding the server and restoring the files was much more than the ransom. Obviously one has to understand the ramifications of giving money to criminals. But what if it was necessary? I’ve talked to several of our customers who have been hit, and to several colleagues whose customers have been bitten, and sometimes the backups are not good enough and the money needs to be paid to stay in business. It is these kinds of moral dilemmas that can keep one up at night.

We started looking into it and really thought through the process, to the point of getting a spare laptop and potentially sacrificing a MIFI device to get to the hacker’s Web site and instructions. We did not really know whether a device that connected would get infected, or what effects that could have on the hardware used. Even the thought of searching for and connecting to something like the FBI site in search of keys was scary to me. Who knows what fake sites could be set up? We had also read and heard that Cryptolocker can get installed just by visiting a URL. So we did not take any chances. Before we got started, we realized that the ransom note stated a 1 to 10 day turnaround on getting the data back. We were not sure if this meant 10 days to get us the key, or 10 days for the solution to decrypt all the files it had encrypted. Additionally, the ransom required bitcoin as payment, and getting bitcoin was new to all three of us. So we left that as the last resort option and moved forward with the better plan.

Second plan of the day:

- Determine the ransom and steps to pay it (last resort).

- Update the customer on the situation and explain the ransom, and what we need to do. Get permission to pay the ransom as a last resort.

- Build a new virtual server to replace the virtual server with the encrypted files. We wanted to leave the old server intact in case something was important in the restore of the new server.

- Restore backup from previous day to the new server

- Reconnect the workstations to the network, and test the systems

- Get home in time for dinner (not really in the plan, but if all went well…)

Rebuilding the server was not my thing (remember #IHATEHardware), but Frank and the networking tech don’t mind and get started. The IT Director has the Windows Server ISO and keys staged for us to use. Hyper-V and the ISO make short work of getting the server operating system installed. But lo and behold, the keys do not work. It turns out the server is R2 and we have keys for something else. We look for the proper ISO and key combinations. We find a stash of DVDs with different versions. Several hours later, we download the proper ISO to match the existing virtual server and get it installed. Still enough time to get the backup restored and everyone home for dinner.

The backup is restored. We poke around and see quite a few files missing including DBFs, CDXs, FPTs, EXEs, DLLs. Some folders have all the data in the data folder, but are missing the EXEs in the application folder. Some folders have the EXEs, but are missing the runtime files. There was no obvious pattern.

The network tech dug into the backup software and came upon a revelation: we had restored a differential backup. Ah, perfect, so we have more work to piece the restore back together. First we have to find the last full backup and then restore the differentials after restoring the full. More work, but an easy enough plan of action. Our customer has four solid state drives rotated as the backups (a fifth is on order to replace the previous fifth one), each capable of holding 680GB. Fortunately, earlier in the day our customer’s onsite developer requested the Controller bring the offsite drives back to the office in case they were needed. Perfect, a plan was working. Then the new networking tech delivered news that was about as devastating as Frank’s original find of Cryptolocker. The last 16 days of backups were ALL differentials. He could not find the last full backup.

I placed a call to the owner to explain the situation, and a second one to the IT Director, who explained where to find the full backup. Unfortunately, what he pointed us to was the differential backup we had already used. You could feel the room deflate. As you can ascertain, we effectively had no backup. Holy cow. My stress level just rose a notch. Earlier in the day the IT Director had told the owner there were three options:

A) Restore the backup

B) Pay the ransom

C) Pack it up and go out of business

Going back in time…

Many years ago when we needed a test data set we would ask the previous IT Director and she would give it to us a day or two later since she had to restore from tape. The restoration process was a pain in the neck and resource intensive. So to help us out I asked Frank to develop a rudimentary backup process to run nightly at midnight. This process copied key files to a folder on one of the computers that is not the server. It was never intended as a full backup or part of the disaster recovery process. From time-to-time the old IT Director would recover files we backed up because it was quicker than the restore from tape. We benefited from this by grabbing the backup for our test machine.
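To give a feel for how little it takes, here is a rough Python sketch of that kind of nightly copy. It is not Frank’s actual process (I am not reproducing that here, and this sketch has none of its handling for open files). The function name `copy_key_files`, the extension list, and the dated-folder layout are all assumptions for illustration.

```python
import shutil
from datetime import date
from pathlib import Path

# Assumption: the "key files" are the Visual FoxPro table, index, and memo files.
KEY_EXTENSIONS = {".dbf", ".cdx", ".fpt"}


def copy_key_files(source_root, backup_root, today=None):
    """Copy key files into backup_root/YYYY-MM-DD, keeping the folder layout.

    Returns (copied, failed). backup_root should live outside source_root,
    ideally on a different machine, so the copy is not scanned as a source.
    """
    source_root = Path(source_root)
    target_root = Path(backup_root) / (today or date.today()).isoformat()
    copied, failed = [], []
    for src in source_root.rglob("*"):
        if not src.is_file() or src.suffix.lower() not in KEY_EXTENSIONS:
            continue
        dst = target_root / src.relative_to(source_root)
        try:
            dst.parent.mkdir(parents=True, exist_ok=True)
            shutil.copy2(src, dst)  # copy2 also preserves time stamps
            copied.append(dst)
        except OSError:
            # Locked or unreadable file: record it and keep going.
            failed.append(src)
    return copied, failed
```

One dated folder per night gives you several generations for free, provided someone prunes the old ones and checks the `failed` list from time to time.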

One of our contractors happened to be in the office on Tuesday and grabbed a copy of the data from Monday night’s backup for some testing he needed to work on. He does this every so often when he is in the office, but he is not there every day and has been known to take long vacations. Earlier in the day I asked him to secure that backup just in case it was needed, but not expecting to ever need it.

A few years ago I requested a test machine to create an isolated environment for the customer to test our application changes. The owner has so much faith in us that he prefers to test in production. We know better and never have that level of trust in ourselves. After many requests and some serious pushback and flak from the current IT Director, we got a test machine, which is a different VM in Hyper-V. The last major testing we did was last August, but at that time we had refreshed the entire VM from production.

Back to solving the problem…we knew we had more options than the IT Director.

- Restore the backup

- Rebuild the backup from Tuesday, restore previous night, and leverage the test machine.

- Pay the ransom

- Start with a baseline from last August from test machine

- Absolutely no talk of going out of business, yet

Our biggest concern was that our own backup from the night before was taken four hours after the encryption process started. But one thing Cryptolocker cannot do is encrypt files that are open. It just so happens that one or more people left an application or two running, which kept some very important files open. Mind you, corporate policy states that employees close all the apps before leaving for the day. So, because someone violated corporate policy, our backup was able to back up some really important files. Sure, these files would have been on the nightly server backup from 7 hours earlier, but we had even fresher data.

We ended up implementing plan B and it worked. We restored the Tuesday backup. We restored the previous night backup and we restored our midnight backup. Still, 77 DBFs were not restored. We used Beyond Compare to help determine the missing files (thank you Scooter Software for the best file/folder comparison software around). It turns out that many of the tables were static, some temporary, and some could be rebuilt or ignored completely. We used Beyond Compare to move over the missing files from the test machine to the production server. The three of us then grabbed the remaining files like the latest EXEs and runtime files from our machines to fill in the gaps.
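Beyond Compare did this work for us, but the core idea of the comparison is simple enough to sketch in Python: list every file under a reference folder (for us, the test machine) that does not exist under the restored folder. The names `relative_files` and `missing_files` are made up for illustration, and this sketch only compares paths; it does not compare contents or time stamps the way a real comparison tool does.

```python
from pathlib import Path


def relative_files(root):
    """Return the set of file paths under root, relative to root, lowercased."""
    root = Path(root)
    return {
        p.relative_to(root).as_posix().lower()
        for p in root.rglob("*")
        if p.is_file()
    }


def missing_files(reference_root, restored_root, extensions=None):
    """Return sorted relative paths present in the reference but not the restore.

    extensions, if given, limits the result to suffixes such as {".dbf", ".cdx"}.
    Paths are lowercased because Windows file names are case-insensitive.
    """
    missing = relative_files(reference_root) - relative_files(restored_root)
    if extensions is not None:
        wanted = {ext.lower() for ext in extensions}
        missing = {m for m in missing if Path(m).suffix in wanted}
    return sorted(missing)
```

Something like `missing_files(test_vm_folder, restored_folder, {".dbf"})` would produce a list comparable to our 77 missing DBFs, which someone still has to review by hand to decide what is static, temporary, or rebuildable.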

Sure, it is not perfect as some of the data was from August of last year, but we know that we have all the key things covered and the core data is the latest and greatest.

I texted the owner the good news and told him I would be in the office before they opened for business on Friday. We left at 10:30pm.

Friday had a few glitches here and there (mostly because we missed some of the Visual FoxPro Reporting APP files), and a couple of machines that relied on the wireless access could not be used until we checked out all the laptops that were coming in from the satellite workers. The only machines affected were patient zero and the file server.

Lessons to reinforce/learn:

- Backup, backup, backup

- Full backups are better than differential

- Differential backups rely on a full backup.

- Test the backups

- Have multiple generations of backups

- Multiple kinds of backups (daily, weekly, monthly)

- Multiple storage methods for backups (disk, mobile disks, offsite and onsite, cloud)

- Review the processes and the disaster recovery plan periodically.

- Refresh the test machine with production on a more regular basis.
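The point about differentials relying on a full backup is easy to check automatically. Below is a minimal Python sketch that takes a catalog of backups and either returns what a restore would need or fails loudly. It assumes the textbook meaning of “differential” (everything changed since the last full, so only the newest differential is needed on top of the full); the backup software in this story may behave differently, and the `Backup` class and `restore_plan` function are hypothetical names, not part of any backup product.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Backup:
    taken_on: date
    kind: str  # "full" or "differential"


def restore_plan(backups):
    """Return [latest full, newest differential taken after it].

    Raises LookupError when no full backup exists, because differentials
    alone cannot rebuild the data.
    """
    ordered = sorted(backups, key=lambda b: b.taken_on)
    fulls = [b for b in ordered if b.kind == "full"]
    if not fulls:
        raise LookupError("no full backup available; differentials alone cannot be restored")
    base = fulls[-1]
    later_diffs = [
        b for b in ordered
        if b.kind == "differential" and b.taken_on > base.taken_on
    ]
    # Only the newest differential is needed on top of the full (if any exist).
    return [base] + later_diffs[-1:]
```

Fed a catalog like ours, sixteen differentials and no full, this raises `LookupError` right away. That is the kind of check I would much rather see fail in a scheduled test than at 7:00pm in the middle of a restore.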

It pays to be lucky

We absolutely lucked out this week. We lucked out because our contractor was in the office on Tuesday and grabbed a backup. He easily could have been on vacation like so many people this time of year. We lucked out because we solved a pain point years ago by creating this backup in the first place. We lucked out that Frank and the new network tech had some recent experience with Cryptolocker. We lucked out that the network tech is very bright and works well with the development team (IT Support and developers do not always get along in my experience). We lucked out that we have a test machine that had the rest of the files. We lucked out that one or more employees violated corporate policy and had the apps open, which normally gives you fits when trying to back up files. We lucked out that our backup process has the intelligence to back up open files. We lucked out that our customer had faith in us. We lucked out that we could deliver a working data set. Our customer lucked out that he is back in business so quickly.

I mentioned that our customer had faith in us. He told me on Friday that his IT Director did not think we would be able to fix this. His daughter, who works in IT at a local community college, did not think we would be able to pull the Phoenix from the ashes. I explained to our customer that from time to time during my career we have relied on pulling off an “IT Miracle”, and each of us is limited in the number of miracles we can pull off. This past week I used up another one. Yes, there were other options, but each option was not as good as the ones higher up on the list, and each of the lower ones had higher costs to the business and long-term ramifications. And one of the options meant giving money to criminals, which is a decision you cannot put a price tag on.

The really sad thing about this is there is no protection from it happening again. In fact, more than one computer could easily have been attacked. The same email could have been opened by more than one person. The same email could arrive tomorrow at the office, and is certainly being delivered each day to other people around the globe as you read this post.

Thanks for taking the time to read our story of how one company went to the brink of disaster and survived to talk about it. I hope the lessons learned and lessons reinforced trigger action on your part to review the disaster recovery plan. If there is no plan, I hope you take the time to make one. Also, take the time to discuss this with your customers. Leave no one behind.

To the entity in charge of my count of “IT Miracles”, please grant upon me double the count I have remaining today. I’m certain this won’t be the last time I need to count on one.

Thanks to everyone who helped out that day. The teamwork was amazing! I never have to be reminded of how great a team we have at White Light Computing. Last Thursday the team shone brightly. We also have a great customer and a newfound friend (the networking tech) who I look forward to working with for many years to come.